WD-NearbyItem

Browse nearby Wikidata items

This year, I originally planned to finally ‘retire’ from competing in the Wikidata Datathon contest.

What is the Wikidata Datathon, anyway?

It’s a local online competition where participants race to add the most bytes to Wikidata within a specified theme and set of topics. It can last several days, and participants ideally stay online as long as possible to add more Wikidata items, sometimes manually and sometimes with batch tools such as QuickStatements. Winners get fame, glory, and prizes. I have never won a grand prize (I’m already at my body’s physical limit for editing Wikidata items), but I have received plenty of Wikidata merchandise as consolation prizes: hats, mugs, bags, stickers, keychains, and shirts. My room is full of Wikidata merchandise because of this.

But since I had never won a grand prize, I planned to retire for real this year.

That’s why I blocked the competition’s official Instagram account, to make sure I would never see another Datathon season again.

But out of the blue, I received the information anyway, straight to my email.

Apparently, someone had added my email address to the mailing list, so I was exposed to that infohazard without my consent.

At that point, I decided this would be my final race. After this, I was going to quit the Wikidata Datathon for real. Just… one more turn.

This month’s Datathon theme was “ports and fishing ports.”

Participants were given a one-week grace period before the actual race on Saturday, Sunday, and Monday. During this grace period, I planned my race.

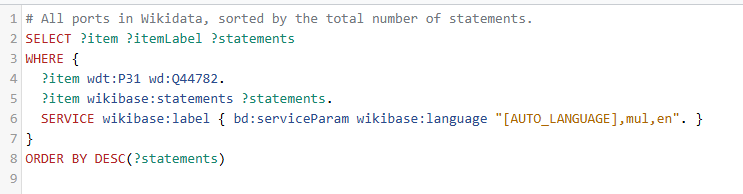

First, I made a SPARQL query to get all Wikidata items that have an “instance of: port” statement, then sorted them based on how many statements each item had.
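A query of this kind could look roughly like the sketch below. It is not necessarily the exact query from the screenshot. It assumes that `Q44782` is the Wikidata item for “port” (worth double-checking before running) and relies on the `wikibase:statements` count that the Wikidata Query Service exposes for each item. The function names are made up for illustration.

```python
import json
import urllib.parse
import urllib.request

ENDPOINT = "https://query.wikidata.org/sparql"
PORT_QID = "Q44782"  # assumption: the item for "port"; verify before use


def build_port_query(class_qid: str = PORT_QID, limit: int = 100) -> str:
    """Build a SPARQL query listing instances of class_qid, most statements first."""
    return f"""
SELECT ?item ?itemLabel ?statements WHERE {{
  ?item wdt:P31 wd:{class_qid} ;
        wikibase:statements ?statements .
  SERVICE wikibase:label {{ bd:serviceParam wikibase:language "en". }}
}}
ORDER BY DESC(?statements)
LIMIT {limit}
""".strip()


def run_query(query: str) -> list:
    """Send the query to the public endpoint and return the result rows."""
    url = ENDPOINT + "?" + urllib.parse.urlencode({"query": query, "format": "json"})
    # The endpoint expects a descriptive User-Agent; this one is a placeholder.
    req = urllib.request.Request(url, headers={"User-Agent": "port-query-sketch/0.1"})
    with urllib.request.urlopen(req, timeout=60) as resp:
        return json.load(resp)["results"]["bindings"]


if __name__ == "__main__":
    # Print the query only; call run_query(...) yourself to hit the live server.
    print(build_port_query(limit=10))
```

The top rows of the result are the candidates for a “model item”, since they carry the most statements.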

I wanted to find the elusive “Wikidata model item,” an item with tons of statements that could teach us the ideal data structure within this theme.

I failed to find that model item. Apparently, port items on Wikidata are not well maintained, even though WikiProject Ports is flourishing on the English Wikipedia.

So I had to check the queried items one by one, trying to find the missing puzzle pieces: the right properties to explain each port item in depth.

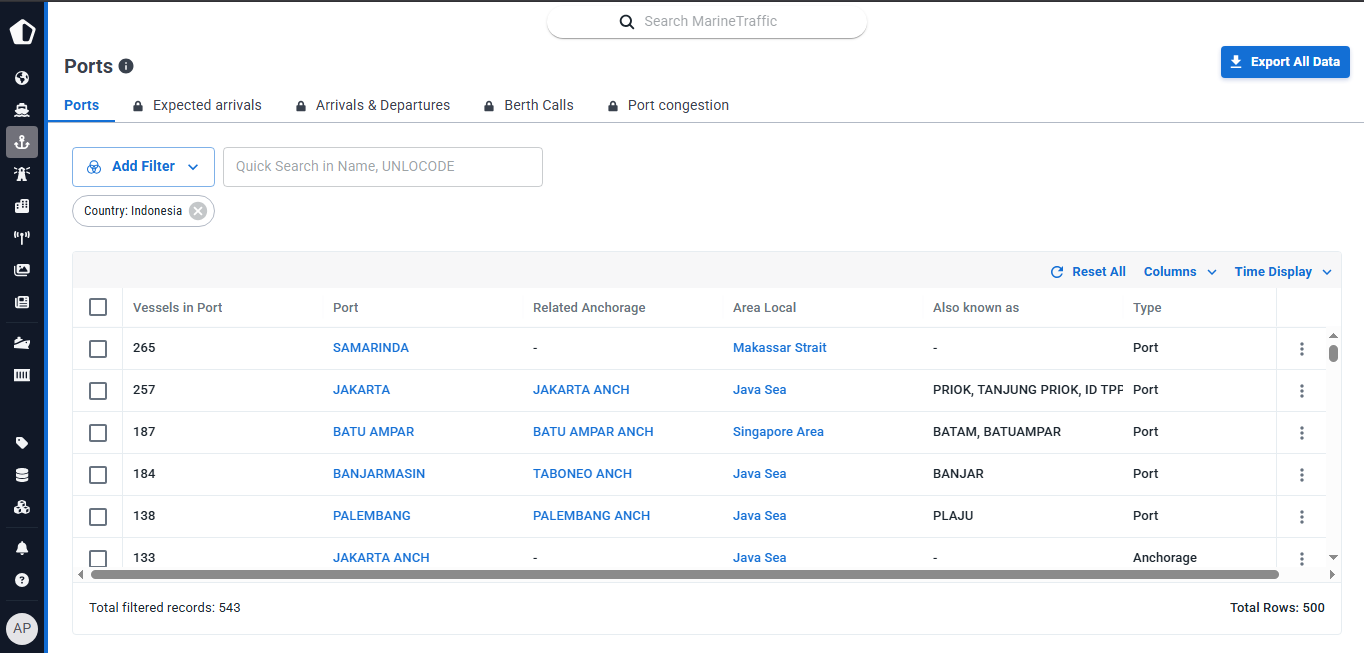

Then I hit the jackpot: the MarineTraffic port ID.

MarineTraffic provides a real-time database showing how many ships are currently standing by in each port. That allowed me to see which ports were actually active at the time. I gathered data from MarineTraffic and sorted it based on how many ships were standing by in each port on the night I collected the data.

This was good enough for my preparation phase. When the race started, I planned to begin from the bottom of that list, starting with the most obscure ports first, so I wouldn’t conflict with other racers. If I started from the top, with the most popular ports, there would be a high chance that other racers would edit the same items.

Finally, the race began.

I started early on Saturday morning and worked until noon.

Before shutting down my PC, I checked the current standings.

I was in second place. A good start.

But wait, who was in first place?

I checked their edit log.

Damn. He had wielded a mass-edit script the night before. That explained how he could be so far ahead from the very beginning of the race.

My spirit began to crack, but I still wanted to continue. I was in second place, still on the podium. Maybe I could overtake him over the next two days.

Then I asked myself: why don’t I use a mass-edit script too?

No.

This is exactly why I planned to retire this year.

For several years now, I’ve known that the Wikidata infrastructure has been suffering from massive data overload. I heard that their Blazegraph backend has already reached its theoretical limit and needs to be replaced urgently. I doubt the original Blazegraph developers ever imagined their creation would be used globally as Wikidata’s backend. I’ve followed this issue for years, but it seems they still haven’t found a better replacement. As a temporary fix, they migrated a large portion of data items related to scientific articles into a separate graph database.

In short, I won’t spam Wikidata with mass scripts and make the situation worse. I make sure that every item I contribute in this Datathon is meaningful, well researched, and built with care.

“But what if you lose the grand prize again to bot users?”

Well, that’s exactly why I’m retiring from this game for real.

Alright, moving on to the next day.

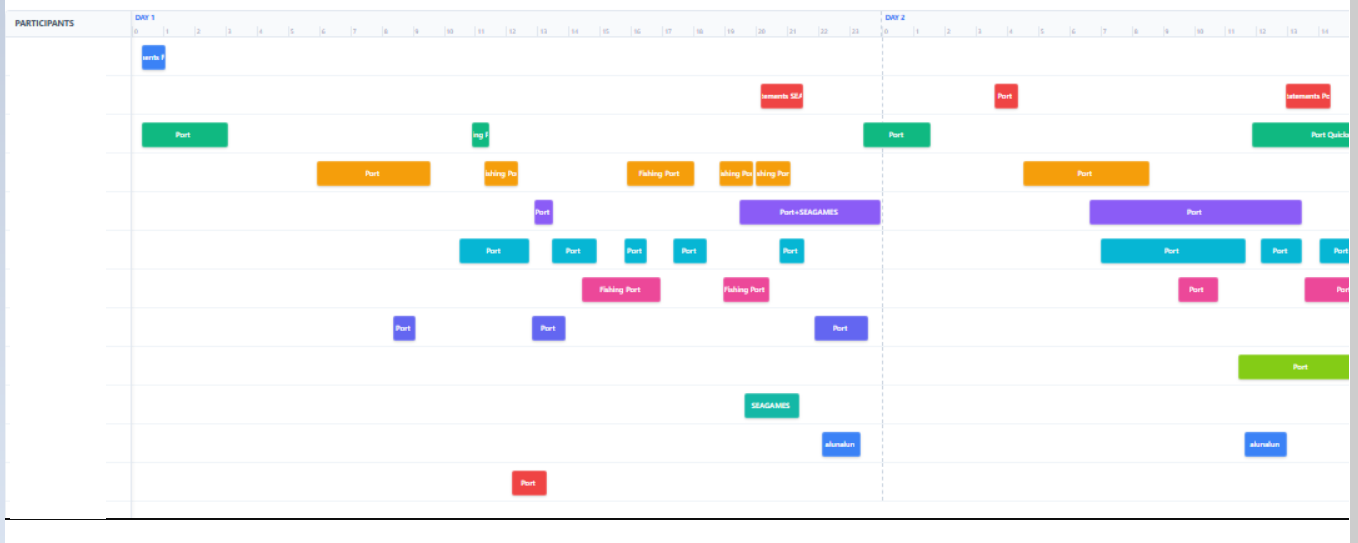

I was now in third place. Two bot users occupied first and second place. I even made a Gantt chart visualizer to compare my progress with that of my enemies.

My spirit began to crack again, but I was still on the podium, so I kept fighting.

The next day came.

I was now in fourth place. Three bot users held first, second, and third place.

Alright. I gave up.

I failed to win the grand prize, even in my last race.

But there was still one day left, so I raced until the very end without any expectation of winning. I didn’t even consult the Gantt chart visualizer I had made anymore.

During the final night, I found some really interesting data. This was no longer about the Datathon competition, just pure research driven by curiosity.

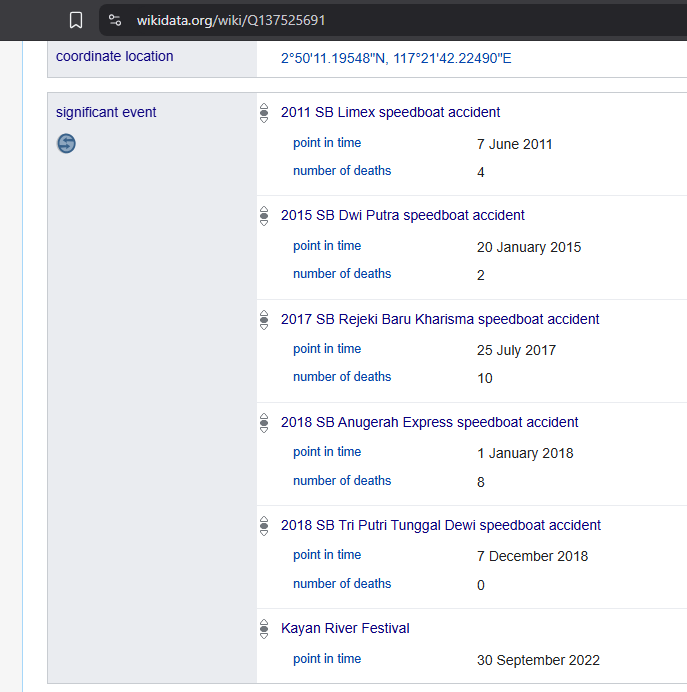

There were plenty of shipwreck cases at a specific port. Far too many.

That made me think: would it be good if we started documenting car, ship, and train accidents and storing them on Wikidata?

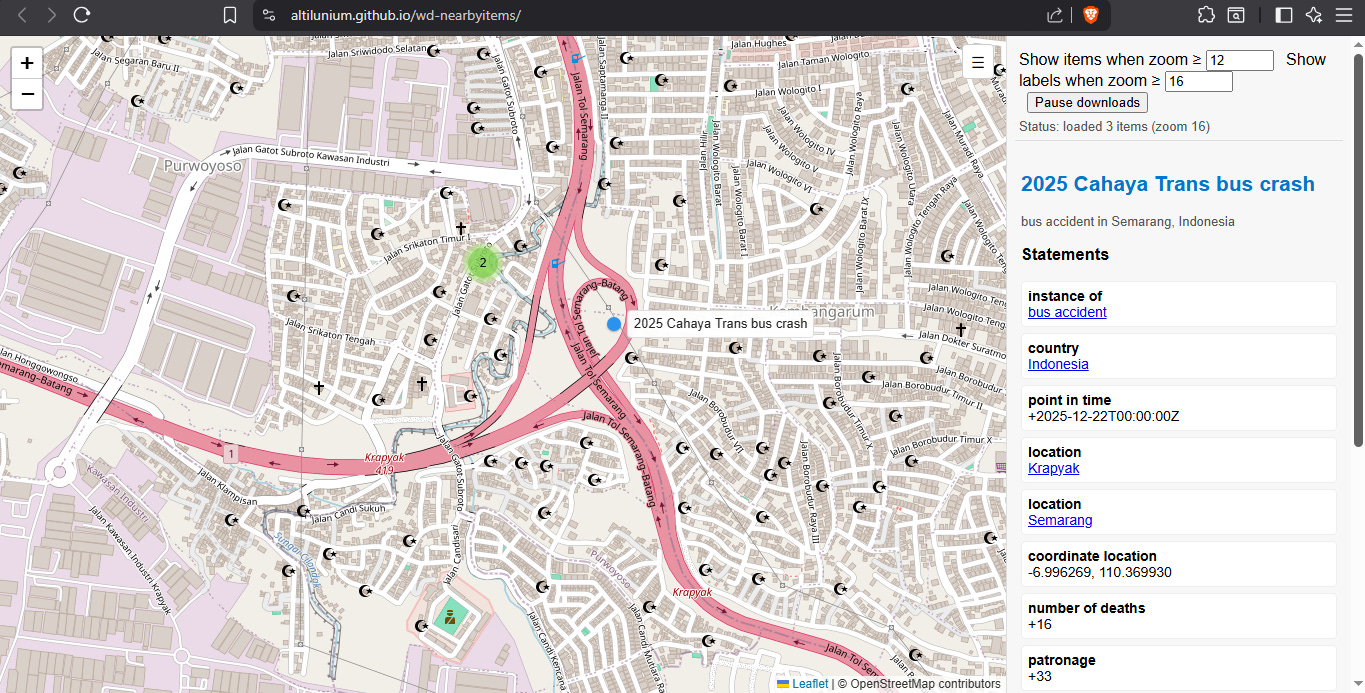

The next day, there was a bus accident in Semarang with many casualties. Based on available news coverage, I tried to construct a Wikidata model item for an “accident event.”

Then I thought: if we collect enough events and coordinates, could we build a dashboard showing all past accidents around a specific road, river, or sea?

Then I thought again: forget accidents, what if we just show all Wikidata items around a map area?

I asked ChatGPT whether such an app already existed. ChatGPT said it hadn’t been done yet. There are apps that show Wikipedia articles near a coordinate, but not Wikidata items. I wasn’t sure whether I should trust that answer, but I decided to build it anyway.

And it’s done: https://altilunium.github.io/wd-nearbyitems/

Public service announcement:

Please use the ‘pause download’ button frequently. It temporarily stops the app from sending too many queries to the Wikidata server once you have already downloaded plenty of data. After downloading enough, take some time to savor it and enjoy the data one by one before greedily asking for more to be displayed on the screen. You can always toggle the pause button off again to download new data.

Pro tip: if you want to download data, do it at a higher zoom level, not a lower one. There is a hidden implementation detail behind this. The app queries all Wikidata items around a single coordinate (the center of what you see on the screen) within a certain radius, which is actually quite small. Because of this quirk, you are not downloading the entire map area visible on the screen, but only a small chunk around the center, with some radius padding. This is why downloading data works much better at higher zoom levels.
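To make that quirk concrete, here is a minimal sketch of a “center plus radius” query, written in Python rather than the app’s own code. It uses the `wikibase:around` service of the Wikidata Query Service with the coordinate property `P625`. The 1 km default radius, the function names, and the 1000-pixel screen width are assumptions for illustration, not values taken from the app. The second helper uses the standard Web Mercator ground-resolution formula (256-pixel tiles) to estimate how wide the visible map is at a given zoom level.

```python
import math


def build_nearby_query(lat: float, lon: float, radius_km: float = 1.0,
                       limit: int = 200) -> str:
    """Build a SPARQL query for items with coordinates near (lat, lon)."""
    # WKT points are written as "Point(longitude latitude)".
    return f"""
SELECT ?item ?itemLabel ?location ?dist WHERE {{
  SERVICE wikibase:around {{
    ?item wdt:P625 ?location .
    bd:serviceParam wikibase:center "Point({lon} {lat})"^^geo:wktLiteral .
    bd:serviceParam wikibase:radius "{radius_km}" .
    bd:serviceParam wikibase:distance ?dist .
  }}
  SERVICE wikibase:label {{ bd:serviceParam wikibase:language "en". }}
}}
ORDER BY ?dist
LIMIT {limit}
""".strip()


def visible_width_km(zoom: int, lat: float, screen_px: int = 1000) -> float:
    """Approximate width of the visible map in km (Web Mercator, 256 px tiles)."""
    metres_per_pixel = 156543.03392 * math.cos(math.radians(lat)) / (2 ** zoom)
    return metres_per_pixel * screen_px / 1000.0


if __name__ == "__main__":
    # Hypothetical coordinates, roughly around Semarang.
    print(build_nearby_query(-6.99, 110.42, radius_km=1))
    for zoom in (10, 13, 16):
        print(zoom, round(visible_width_km(zoom, -6.99), 1), "km across the screen")
```

At low zoom levels the screen spans far more ground than a small fixed radius can cover, so most of the visible map stays empty. Zooming in shrinks the visible width toward the size of the queried circle.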

As it turns out, a similar tool already exists: wdlocator.